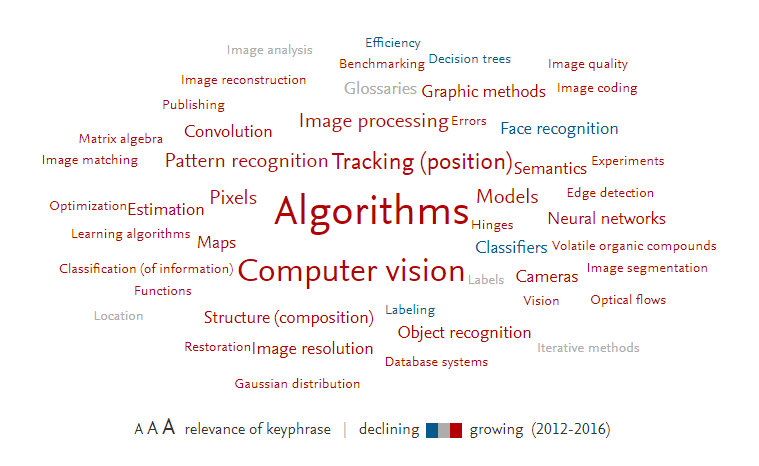

DGIF 2017 정보통신융합전공은 "Visual Intelligence"라는 핫한 주제로 10명의 연사들의 강연이 마련되어 있습니다. 캘리포니아 메르세드대학의 Ming-Hsuan Yang 교수님이 "Recent Results on Image Editing and Learning Filters"를 주제로 발표합니다. FWCI 10이상으로 논문 영향력이 어마어마 하신 분의 연구는 어떨까요? 관련 논문과 책 등을 미리 읽어보시고, Computer Vision을 연구하시는 DGIST 곽수하, 조성현 교수님의 강연도 경청해주세요!

* 불법, 상업/광고, 욕설 등의 댓글은 삭제될 수 있습니다.